Tzu-Mi Lin, Yu-Hsin Wu and Lung-Hao Lee.

In Proceedings of the Working Notes of CLEF 2024 – Conference and Labs of the Evaluation Forum.

Abstract

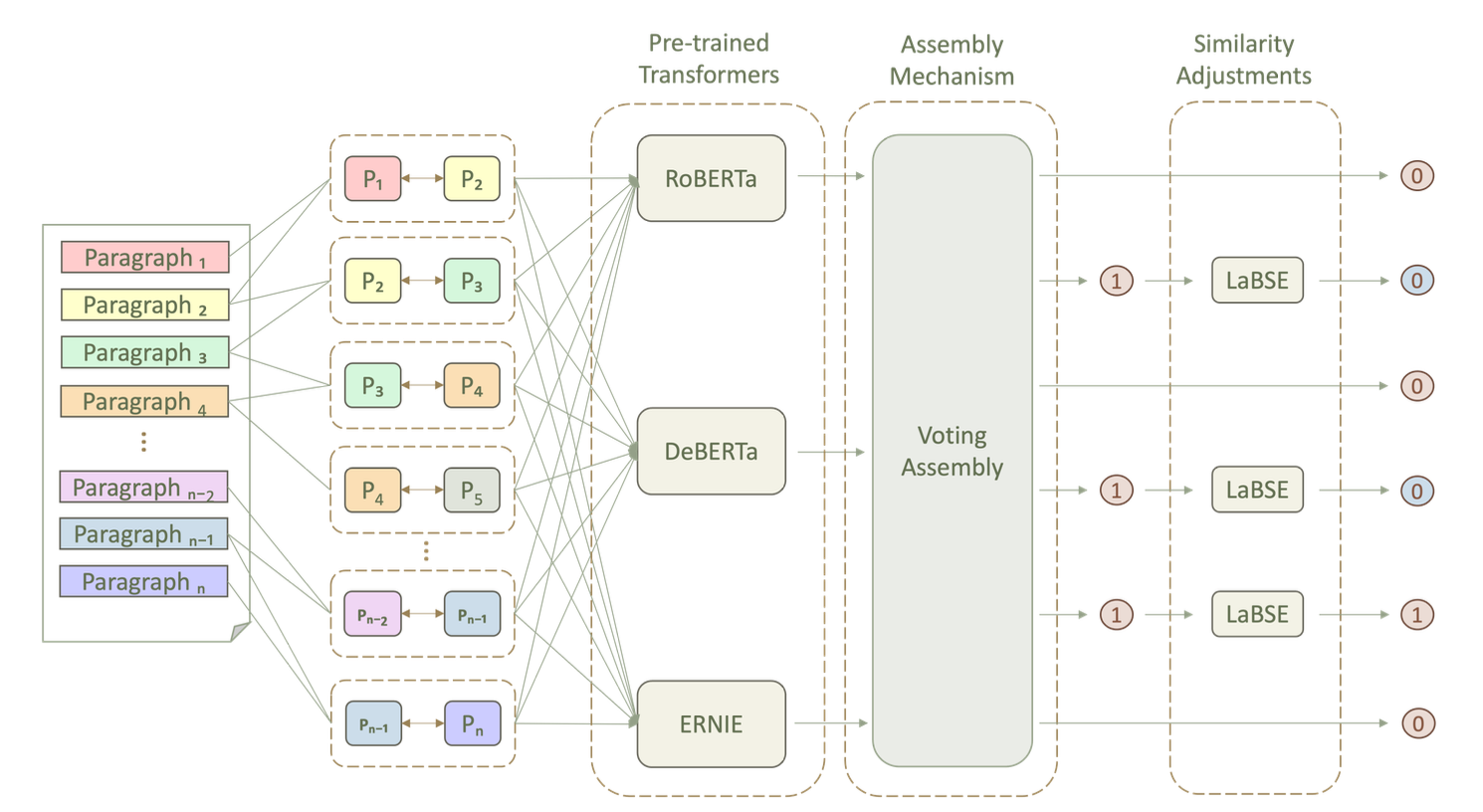

This paper describes our NYCU-NLP system design for the multi-author writing style analysis tasks of the PAN Lab at CLEF 2024. We propose a unified architecture that integrates transformer-based models with similarity adjustments to identify author switches within a given multi-author document. We first fine-tune the RoBERTa, DeBERTa and ERNIE transformers to detect differences in writing style between two given paragraphs. The output prediction is then determined by an ensemble mechanism. We also use similarity adjustments to further enhance multi-author analysis performance. The experimental data covers three difficulty levels that reflect simultaneous changes of authorship and topic. Our submission achieved macro F1-scores of 0.964, 0.857 and 0.863 for the easy, medium and hard levels, respectively, ranking first and second on the hard and medium levels, out of 16 and 17 participating teams, respectively.
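The pairwise framing and the ensemble step described above can be illustrated with a minimal sketch. It assumes, purely for illustration, that each fine-tuned model outputs a probability of a style change for every pair of consecutive paragraphs and that the ensemble averages those probabilities and applies a 0.5 threshold; the abstract does not specify the actual ensemble rule, and the similarity adjustment is not modeled here. The function names and the probability values are hypothetical.

```python
from statistics import mean


def paragraph_pairs(paragraphs):
    """Return consecutive paragraph pairs; each pair is one change/no-change decision."""
    return list(zip(paragraphs, paragraphs[1:]))


def ensemble_predict(prob_per_model, threshold=0.5):
    """Average per-model change probabilities for each pair, then threshold the mean.

    Assumption: soft voting with a fixed threshold. The real system may combine
    its models differently.
    """
    averaged = [mean(probs) for probs in zip(*prob_per_model)]
    return [1 if p >= threshold else 0 for p in averaged]


# Made-up probabilities standing in for three fine-tuned classifiers
# (one row per model, one column per paragraph pair).
probs = [
    [0.9, 0.2, 0.6],
    [0.8, 0.1, 0.4],
    [0.7, 0.3, 0.3],
]

doc = ["paragraph 1", "paragraph 2", "paragraph 3", "paragraph 4"]
pairs = paragraph_pairs(doc)
labels = ensemble_predict(probs)  # 1 = author switch between the two paragraphs
```

A document with n paragraphs yields n - 1 decisions, so the four paragraphs here produce three labels, one per paragraph boundary.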